What "optimism" does to "apollo-cache-inmemory"

September 06, 2019

apollo-cache-inmemory(InMemoryCache) is the recommended cache implementation for Apollo Client. It caches your GraphQL response by breaking up a GraphQL query result into its constituent schema objects and stores it in a map.

One of the key characteristics of

InMemoryCacheis that it normalizes your data before saving it to the store by splitting the result into individual objects, creating a unique identifier for each object, and storing those objects in a flattened data structure. By default,InMemoryCachewill attempt to use the commonly found primary keys for the unique identifier if they exist along with__typenameon an object.(known asdataIdFromObject)

By normalizing the result objects, InMemoryCache can reduce the cache size for large amounts of repeated data nested in a different query tree. It also allows the cache to return partial results for other queries, reducing the overall number of network calls.

This cache structure gives us a great benefit and we (product developers) can always assume that if Query 2 watches the same node then both of them will always be synced.

Let’s say that you request this hypothetical GetRepositry query (“Query 1”) to GitHub and get the following response:

# Query 1

query GetRepositry {

repository(owner: "apollographql", name: "apollo-client") {

id

__typename

name

assignableUsers(first: 2) {

node {

id

__typename

name

}

}

}

}{

"data": {

"repository": {

"id": "1",

"__typename": "Repository",

"name": "apollo-client",

"assignableUsers": [

{

"node": {

"id": "1",

"__typename": "User",

"name": "Ben"

}

},

{

"node": {

"id": "2",

"__typename": "User",

"name": "Hugh"

}

}

]

}

}

}As you can see, you would get the apollo-client repository data with 2 assignable users (assignableUsers: a list of users that can be assigned to issues in this repository).

When caching the response, InMemoryCache breaks up the response and hoists up each node (“Repositry:1”, “User:1” and “User:2”) as shown below:

{

"Repository:1": {

"__typename": "Repository",

"id": "1",

"name": "apollo-client"

},

"User:1": {

"__typename": "User",

"id": "1",

"name": "Ben"

},

"User:2": {

"__typename": "User",

"id": "2",

"name": "Hugh"

},

"ROOT_QUERY": {

"repository": {

"type": "id",

"id": "Repository:1",

"assignableUsers": [

{

"id": "User:1",

"type": "id",

"__typename": "User"

},

{

"id": "User:2",

"type": "id",

"__typename": "User"

}

]

}

}

}The process is shown in the following animation:

As you can see, normalization gives you the assurance that the values will always be the same if 2 different queries are watching the same node.

To clearly see the benefit, let’s say that you fire up another query (“Query 2”) to fetch the react-apollo repository data. You would get the id, the name of the repository, and the same 2 assignable users. Note that after you requested “Query 1”, Ben (“User:1”) changed his name to “Benj”.

Here’s how the cache normalization works for “Query 2” response:

In the animation above, you can see that the same normalization process occurs and it updates “User:1”’s name. “Query 1” was watching the changes in “User:1” as well as the other relevant nodes in the normalized cache. It gets an update and synced by the broadcasted changes from “User:1“‘s update.

Although this is only a rough description of what occurs, you can easily see why we can make the assumption that if 2 queries watch the same node, both of them are always synced

Normalization allows us to scale the app without having to worry about manually updating the cache every time any changes happens.

Trade-Offs

In exchange for this benefit, due to the nature of the normalization data structure and to ensure the exactness of the cache, when you want to get a query’s cached result, it has to reconstruct the data from the normalized graph.

In the example above, in order to dispatch the broadcasting event reliably, every time related nodes have any update, it has to compute the previous result in order to compare it to the current result so that broadcastings happen only when there is a change. This computation of previous results had been a performance bottleneck as it happened very frequently, but now it only happens when the related node has an update and exact computation is necessary. “optimism” enables us to reliably reuse the previously computed result objects, instead of re-computing them from scratch each time.

This is one of the performance sensitive part of the cache because it takes parsing the query document to get each item with the requested fields from the cache to complete the query result.

This also happens when you want to manually update the query cache such as client.readQuery & client.writeQuery. It is used very often in real world apps if you want to take advantage of optimistic UI. Every time you set the optimisticResponse in a single mutation and directly update the cache, those updates run twice, once with the optimistic and once with the real response from the server. (Optimistic data handling is a difficult part of cache maintenance and it deserves another post.)

Let’s say you want to get the cached response of “Query 2” from the InMemoryCache, since it doesn’t have the exact query cache tree for “Query 2”, it has to re-compute it in order to return the cache.

client.readQuery({

query: gql`

query GetRepositry {

repository(owner: "apollographql", name: "react-apollo") {

id

__typename

name

assignableUsers(first: 2) {

node {

id

__typename

name

}

}

}

}

`,

})You can see how the query cache tree is constructed per readQuery request. If you imagine that this construction happens every time to compare the previous request and the current one on every watched query, it is easy to understand that this is a very performance-sensitive part of the cache.

The good news is that there’s a library called “optimism” introduced to InMemoryCache to reduce this performance issue.

“optimism” to the rescue

optimism is a memorization library and it caches the result of a function so that the function’s repeated calls could become nearly instantaneous from the second time. It also provides the option of how to invalidate the cache so that it will become a “fresh” call the next time the function gets called,

Here’s how you can use the wrap from optimism which receives the “original function” as the first param and “caching option” as the second param:

import { wrap, defaultMakeCacheKey } from 'optimism'

const cachedFunction = wrap(function originalFunction(a, b) {

// Original function body...

}), {

// Maximum number of cached values to retain.

max: Math.pow(2, 16),

// Optional function that can be used to simplify (or reduce dimensionality) of the input arguments.

makeCacheKey(a, b) {

// Return a value that could be used as a key in a Map.

// Returning nothing (undefined) forces the actual function

// to be called, skipping all caching logic.

// The default behavior works even if a or b are objects:

return defaultMakeCacheKey(a, b);

}

// Optional function that can be used to observe changes that

// might affect the validity of the cached value.

subscribe(a, b) {

const watcher = fs.watch(a, () => {

// Invalidates the cached value for these arguments, after

// transforming them with makeCacheKey. Idempotent.

cachedFunction.dirty(a, b);

};

return () => watcher.close();

}

...

}optimism is used for InMemoryCache to remember each node so that it doesn’t have to construct the whole query cache tree every time it’s needed. By caching each node, it returns the previous results immediately (including nested result objects, not the top-level result), without any unnecessary re-computation, as long as the underlying data ids involved in the original computation have not been modified in the meantime. It’s also taken care of when to “dirty” the node so that the next time the node needs to be calculated it will be a “fresh” call.

Let’s look at how optimism is applied in the codebase. In case of checking whether to run broadcastings, InMemoryCache#diff is the entry point and responsible for checking whether the broadcasting is necessary or not, every time the store has up update. If there is, it fires broadcast.

// inMemoryCache.ts

// This method is wrapped in the constructor so that it will be called only

// if the data that would be broadcast has changed.

private maybeBroadcastWatch(c: Cache.WatchOptions) {

c.callback(

this.diff({

query: c.query,

variables: c.variables,

previousResult: c.previousResult && c.previousResult(),

optimistic: c.optimistic,

}),

);

}It also internally calls readFromStore#diffQueryAgainstStore to get the diff from the cache.

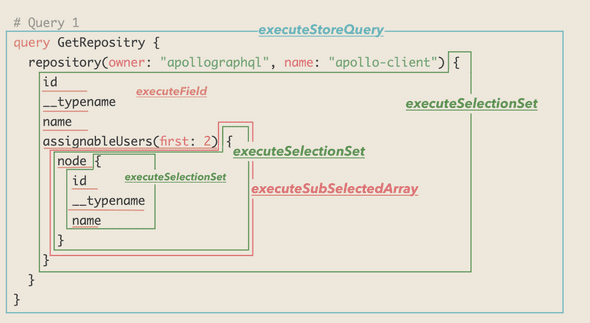

Then readFromStore#diffQueryAgainstStore leads to 4 different execute methods accordingly to finally get the cached result.

Each of those is responsible for the whole query, selection sets, scalar value fields, and scalar value fields of an array.

Of those 4 methods, executeStoreQuery, executeSelectionSet and executeSubSelectedArray are memoized based on the params which can uniquely identify the same context of the computation.

(Fun fact: executeField isn’t memoized. It can be cached though, getting the cached value and getting the actual value isn’t that different in performance if it is a scalar value. On top of that, it still takes up the memory if cached. So if the performance doesn’t change that much, caching isn’t worthwhile. Nothing comes free.)

So once we have those memoized functions, what we want to do is to track them.

There’s DepTrackingCache, where all those dependencies are managed.

import { wrap } from 'optimism';

type OptimisticWrapperFunction<T extends any[], TResult> = ((

...args: T

) => TResult) & { dirty: (...args: T) => void; };

export class DepTrackingCache implements NormalizedCache {

// Wrapper function produced by the optimism library, used to depend on

// dataId strings, for easy invalidation of specific IDs.

private depend: OptimisticWrapperFunction<[string], StoreObject | undefined>;

constructor(private data: NormalizedCacheObject = Object.create(null)) {

this.depend = wrap((dataId: string) => this.data[dataId], {

disposable: true,

makeCacheKey(dataId: string) {

return dataId;

},

});

}

public get(dataId: string): StoreObject {

this.depend(dataId);

return this.data[dataId]!;

}

public set(dataId: string, value?: StoreObject) {

const oldValue = this.data[dataId];

if (value !== oldValue) {

this.data[dataId] = value;

this.depend.dirty(dataId);

}

}

public delete(dataId: string): void {

if (hasOwn.call(this.data, dataId)) {

delete this.data[dataId];

this.depend.dirty(dataId);

}

}

...

}In the get method, DepTrackingCache starts tracking the dataId(dataIdFromObject) so that it returns the cached value the next time when the memoized functions that were described above are called.

Also, later when the same dataId’s data has a new value via either set or delete method, DepTrackingCache invalidates(dirty) the dataId, and the next time you want the data of the dirtied dataId via memoized functions, it will become a “fresh” call and compute the updated values.

(Spoiler: In the next version of apollo-cache-inmemory, DepTrackingCache is replaced with EntityCache. But the idea is the same, it got even better with the separation of concerns)

This is how it all done in one PR by Ben Newman.

Here’s his tweet about it. As you can see, the result is extraordinary!

Bouncing back from a distant last place, the @apollographql InMemoryCache is now neck-and-neck with Hermes on @convoyteam's own benchmark suite, which means the two fastest #GraphQL caching systems are both implementations of the ApolloCache interface: https://t.co/mVXPiJiuxe pic.twitter.com/nzExPZ1Phf

— Ben Newman (@benjamn) September 27, 2018

You can also watch Ben’s talk at GraphQL Summit.

What’s even more exciting is that there is more to come from the Apollo Team! There is continuous exploration in GraphQL caching. If you want more information, this link is pretty helpful.